Summary: Breaking a product down into separate stages of engagement allows for clearer focus on specific jobs to be done.

Symposia has been a fun project. It’s a standard conference calling tool similar to GoToMeeting, WebEx and ÜberConference. Its unique added value is that it records the call and ties all notes back to the specific moment in the conversation where they were taken. Participants can review the call by navigating the one-dimensional audio stream in two-dimensional visual space. Put another way, Symposia adds bulleted lists, color, bold text, and margins to a dull MP3 file. You can now skim recorded phone conversations like you would a memo or Google search results.

We designed around the occasional job of trying to remember a specific conversation with colleagues or customers.

This novel approach has been valuable to some. It’s like Gmail: when you need to find a specific message among your thousands of threads, you’re really glad search is there. But, as with Gmail, it turns out the review action is used seldom relative to other features. We still live in a transport world, and users will conduct 10+ calls before they review one. This is not unlike composing 10+ messages or replies in Gmail before you search for that missing receipt.

This insight led us to re-examine the flows for creating and hosting conference calls. These flows are neither our core value nor our differentiator, but if they are what our users interact with every day, we need to be sure the design is optimal.

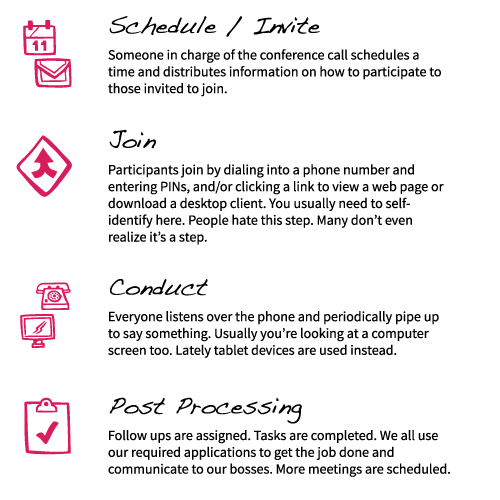

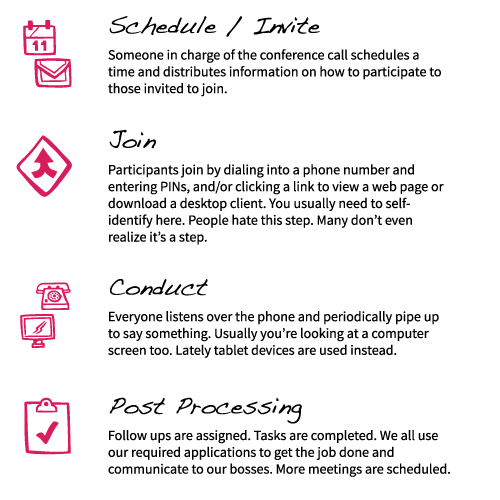

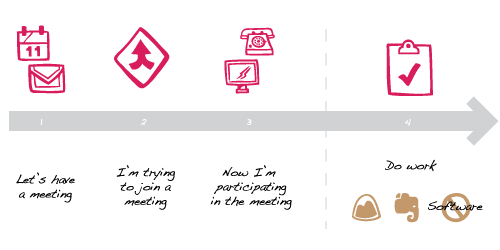

I observed a key pattern during this re-examination. There are four distinct stages to a conference call: Schedule, Join, Conduct, Post-Process.

Most people use another tool for stage one, such as a calendar application, email, or unstructured communication (instant messaging). Scheduling is a hassle and often a source of wasted time. The key outcomes of this stage are syncing time zones, providing local international dial-in numbers, and giving participants the right call to action for joining.

Joining is the second stage, and it is overlooked by almost every vendor. I find this ironic, because it’s also the single largest source of frustration with conference calls. Take a moment to contemplate everything that can go wrong:

- The phone number and join link are out of date because the host had to recreate the meeting or accidentally created multiple meetings.

- Participant doesn’t have a local dial-in number.

- People generally need to download something (we’ve all used WebEx or GoToMeeting, right?), and the process is slow. Plus, the systems themselves are often problematic: IT administrators may block the download of a Java client or lock down an IP range.

- People are late. People arrive early.

- Someone misses the call entirely.

- Participants might need to remember login credentials, and who likes to remember usernames and passwords?

It’s a full process unto itself and deserves to be a separate stage. I find it surprising and exhausting how poorly this stage is designed. Calls start out on a negative note before anyone even speaks, and people enter the conversation already in a bad mood.

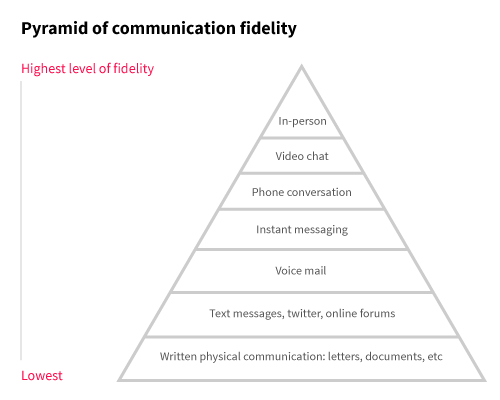

The third stage is the actual meeting. Other products tend to focus on this stage. You get interactive whiteboards, call management widgets, screen sharing, social media plugins, LinkedIn profiles, liking-sharing-posting-chatting-talking … you name it. All in the name of making the call “more useful” by making it more interactive. Sometimes I feel all this actually makes the conversation more distracting. Many users resent the tools they encounter during this stage.

The fourth stage is a never-ending ray extending out from the moment the call ends. Products tend not to associate this stage with a conference calling application, but it is a vital part of the experience. Users create follow-ups, assign tasks, share files, and log information in other systems, such as a CRM, an ATS, or Basecamp.

I see this stage as the connector to other jobs participants need to do; it is a launching point for other actions. However, it is important to remember Des Traynor’s point about staying true to what your product is meant to do. You don’t want to extend beyond that line. For example, it is sometimes difficult to remember that Symposia is a conference calling application, not a calendar scheduling tool or a framework for to-dos.

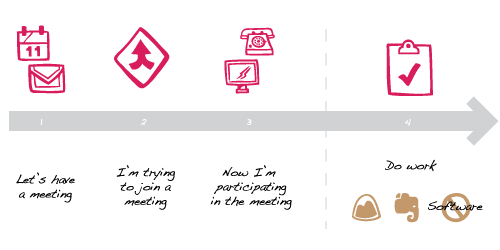

It has helped me to think of a conference call as a single line that has a beginning but no end, and then to cut that line into four sections.

I see the Post-Process stage as the most fertile area for innovation. Users might come back to the call at a later point. They might need to pull context from it into a follow-up. We have yet to understand its full value, but the possibilities for aiding specific jobs to be done are rich.

Thinking about a conference call in four stages has helped us. Addressing each stage individually, and targeting features and flows at the successful completion of the job to be done within it, has made the product more useful overall.